Refilming with Depth-Inferred Videos

Guofeng Zhang¹, Zilong Dong¹, Jiaya Jia², Liang Wan², Tien-Tsin Wong², Hujun Bao¹

¹State Key Lab of CAD&CG, Zhejiang University; ²The Chinese University of Hong Kong

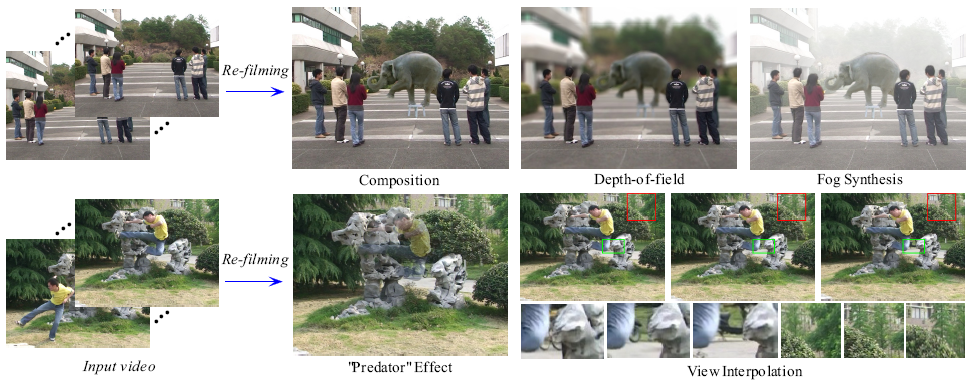

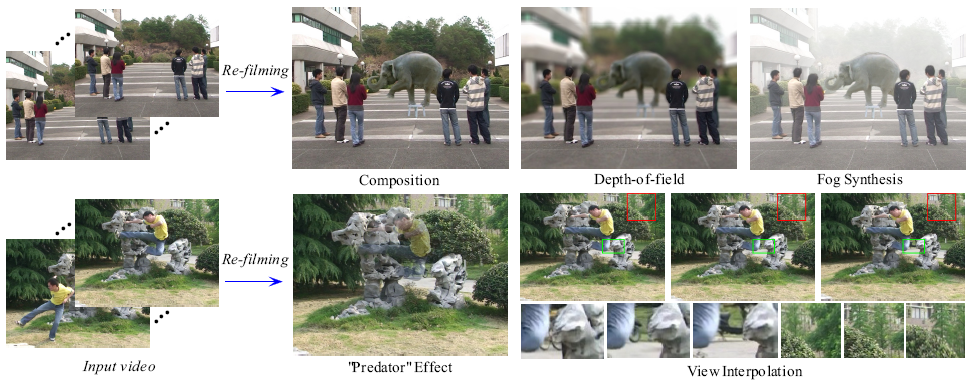

Abstract: Compared with still-image editing, content-based video editing faces the additional challenge of maintaining spatio-temporal consistency with respect to scene geometry. This makes it difficult to modify video content seamlessly, for instance, to insert or remove an object. In this paper, we present a new video editing system for creating spatio-temporally consistent and visually appealing re-filming effects. Unlike typical filmmaking practice, our system requires no labor-intensive construction of 3D models or surfaces mimicking the real scene. Instead, it is based on unsupervised inference of view-dependent depth maps for all video frames. We provide interactive tools that require only a small amount of user input to perform elementary video content editing, such as separating video layers, completing the background scene, and extracting moving objects. These tools can be used to produce a variety of visual effects in our system, including but not limited to video composition, the "predator" effect, bullet time, depth of field, and fog synthesis. Some of these effects can be achieved in real time.
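Once every frame has a per-pixel depth map, depth-dependent effects such as fog reduce to a per-pixel blend. The sketch below is an illustration only, not the authors' implementation: it assumes the common exponential attenuation model, where transmittance t = exp(-density * d) and the output pixel is t * color + (1 - t) * fog_color. The function name `synthesize_fog` and its `fog_color` and `density` parameters are hypothetical. It also assumes the depth map stores distance from the camera; if the recovered maps store disparity (inverse depth), they would need converting first.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def synthesize_fog(
    frame: List[List[RGB]],
    depth: List[List[float]],
    fog_color: RGB = (0.8, 0.8, 0.85),
    density: float = 0.5,
) -> List[List[RGB]]:
    """Hypothetical helper: blend each pixel toward fog_color by its depth.

    Assumes the exponential attenuation model t = exp(-density * d):
    out = t * pixel + (1 - t) * fog_color. Pixels at small depth keep
    their color; distant pixels fade toward the fog color.
    """
    # The depth map must be aligned pixel-for-pixel with the frame.
    if len(frame) != len(depth) or any(
        len(row) != len(drow) for row, drow in zip(frame, depth)
    ):
        raise ValueError("frame and depth map must have the same dimensions")

    out: List[List[RGB]] = []
    for row, drow in zip(frame, depth):
        new_row: List[RGB] = []
        for pixel, d in zip(row, drow):
            t = math.exp(-density * d)  # transmittance in (0, 1] for d >= 0
            new_row.append(
                tuple(t * c + (1.0 - t) * f for c, f in zip(pixel, fog_color))
            )
        out.append(new_row)
    return out


if __name__ == "__main__":
    # A 1x2 black frame: one pixel at the camera, one 10 units away.
    frame = [[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]
    depth = [[0.0, 10.0]]
    print(synthesize_fog(frame, depth, fog_color=(1.0, 1.0, 1.0)))
```

With a white fog color, the near pixel stays black while the far pixel becomes almost white. Because the blend is independent per pixel, temporal consistency of the fog comes entirely from the consistency of the depth maps across frames.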

Guofeng Zhang, Zilong Dong, Jiaya Jia, Liang Wan, Tien-Tsin Wong, and Hujun Bao. Refilming with Depth-Inferred Videos. IEEE Transactions on Visualization and Computer Graphics (TVCG), 15(5):828-840, 2009.

BibTeX:

@article{Zhang_refilming_tvcg09,
  author = {Guofeng Zhang and Zilong Dong and Jiaya Jia and Liang Wan and Tien-Tsin Wong and Hujun Bao},
  title = {Refilming with Depth-Inferred Videos},
  journal = {IEEE Transactions on Visualization and Computer Graphics},
  volume = {15},
  number = {5},
  year = {2009},
  pages = {828--840},
  publisher = {IEEE Computer Society},
  address = {Los Alamitos, CA, USA},
}

Result Movie

