Learning Multi-domain Networks for Real-time Person Foreground Segmentation

IEEE Transactions on Image Processing, 2022, 31: 585-597.

Zhiyuan Liang, Kan Guo, Xiaobo Li, Xiaogang Jin, and Jianbing Shen

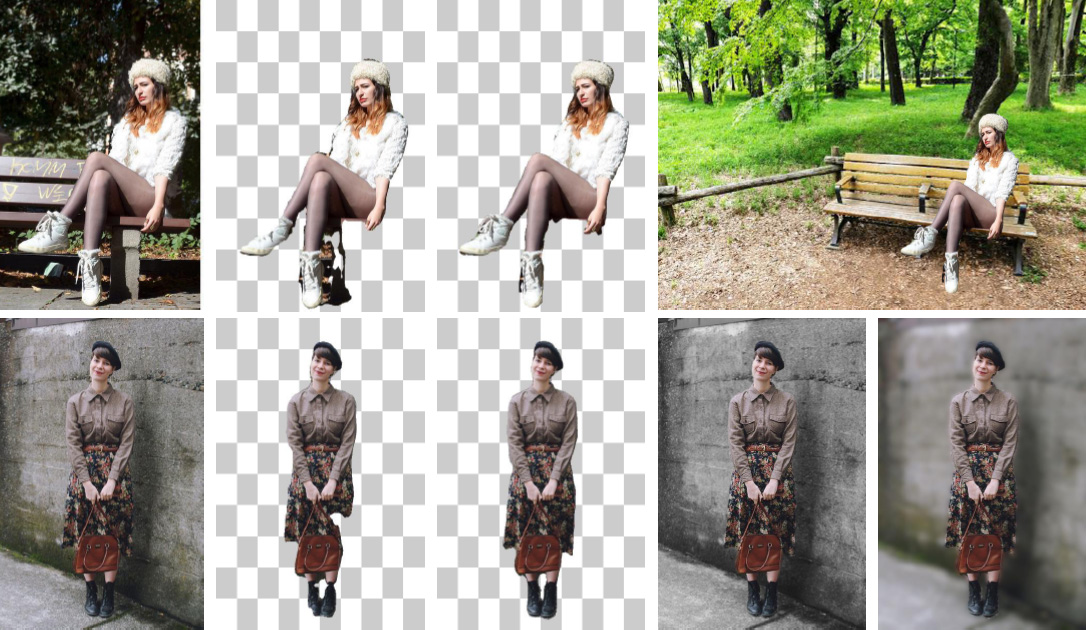

Examples of photo-editing applications that benefit from single person segmentation. From left to right, column (a) shows the input images, (b) shows the segmentation results of BiSeNet [36], (c) shows the results of our approach, and (d) shows the edited images based on our segmentation results.

Abstract

Separating the dominant person from a complex background is important for human-related research and photo-editing applications. Existing segmentation algorithms are either too general to separate the person region accurately or unable to achieve real-time speed. In this paper, we introduce the multi-domain learning framework into a novel baseline model to construct the Multi-domain TriSeNet Networks for real-time single person image segmentation. We first divide the training data into different subdomains based on the characteristics of single person images, then apply a multi-branch Feature Fusion Module (FFM) to decouple the networks into domain-independent and domain-specific layers. To further enhance accuracy, we propose a self-supervised learning strategy that mines domain relations during training; it helps transfer domain-specific knowledge by improving predictive consistency among the different FFM branches. Moreover, we create a large-scale single person image segmentation dataset named MSSP20k, which consists of 22,100 real-world images with pixel-level annotations. The MSSP20k dataset is more complex and challenging than existing public datasets in terms of scale and variety, which greatly benefits human segmentation research. Experiments show that our Multi-domain TriSeNet outperforms state-of-the-art approaches on both public datasets and the newly built dataset while running at real-time speed.
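The abstract describes the architecture only at a high level. As a rough illustration of the two ideas it names, here is a minimal NumPy sketch, assuming a shared (domain-independent) layer that feeds several domain-specific output branches, plus a consistency term that penalizes disagreement among the branch predictions. The class `MultiBranchHead`, the function `consistency_loss`, the layer sizes, and the squared-deviation-from-the-mean form of the loss are all hypothetical choices made for illustration; they are not the FFM or the self-supervised loss defined in the paper.

```python
import numpy as np


def softmax(logits, axis=-1):
    # Numerically stable softmax over the class axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


class MultiBranchHead:
    """Hypothetical sketch: one shared (domain-independent) projection
    followed by one domain-specific projection per subdomain."""

    def __init__(self, in_dim, hidden_dim, num_classes, num_domains, seed=0):
        rng = np.random.default_rng(seed)
        # Shared weights used by every subdomain.
        self.shared = rng.normal(0.0, 0.1, (in_dim, hidden_dim))
        # One output projection per subdomain (the "branches").
        self.branches = [
            rng.normal(0.0, 0.1, (hidden_dim, num_classes))
            for _ in range(num_domains)
        ]

    def forward(self, features):
        # features: (num_pixels, in_dim); shared layer with ReLU activation.
        hidden = np.maximum(features @ self.shared, 0.0)
        # Per-branch logits, each of shape (num_pixels, num_classes).
        return [hidden @ w for w in self.branches]

    def predict(self, features, domain):
        # Use only the branch that matches the sample's subdomain.
        return softmax(self.forward(features)[domain])


def consistency_loss(branch_logits):
    # Mean squared deviation of each branch's class probabilities
    # from the average prediction across all branches.
    probs = np.stack([softmax(l) for l in branch_logits])  # (B, N, C)
    mean = probs.mean(axis=0, keepdims=True)
    return float(((probs - mean) ** 2).mean())


if __name__ == "__main__":
    head = MultiBranchHead(in_dim=8, hidden_dim=16, num_classes=2, num_domains=3)
    x = np.random.default_rng(1).normal(size=(5, 8))
    logits = head.forward(x)
    print(len(logits), logits[0].shape)
    print(consistency_loss(logits))
```

In this sketch the shared weights receive gradients from every subdomain, while each branch sees only its own; adding `consistency_loss` to the per-branch segmentation loss would push the branches toward agreeing predictions, which is one simple way to read "improving predictive consistency among different FFM branches".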