Real-time Controllable Motion Transition for Characters

ACM Transactions on Graphics (Proc. SIGGRAPH 2022), 2022, 41(4): Article No. 137.

Xiangjun Tang, He Wang, Bo Hu, Xu Gong, Ruifan Yi, Qilong Kou, Xiaogang Jin

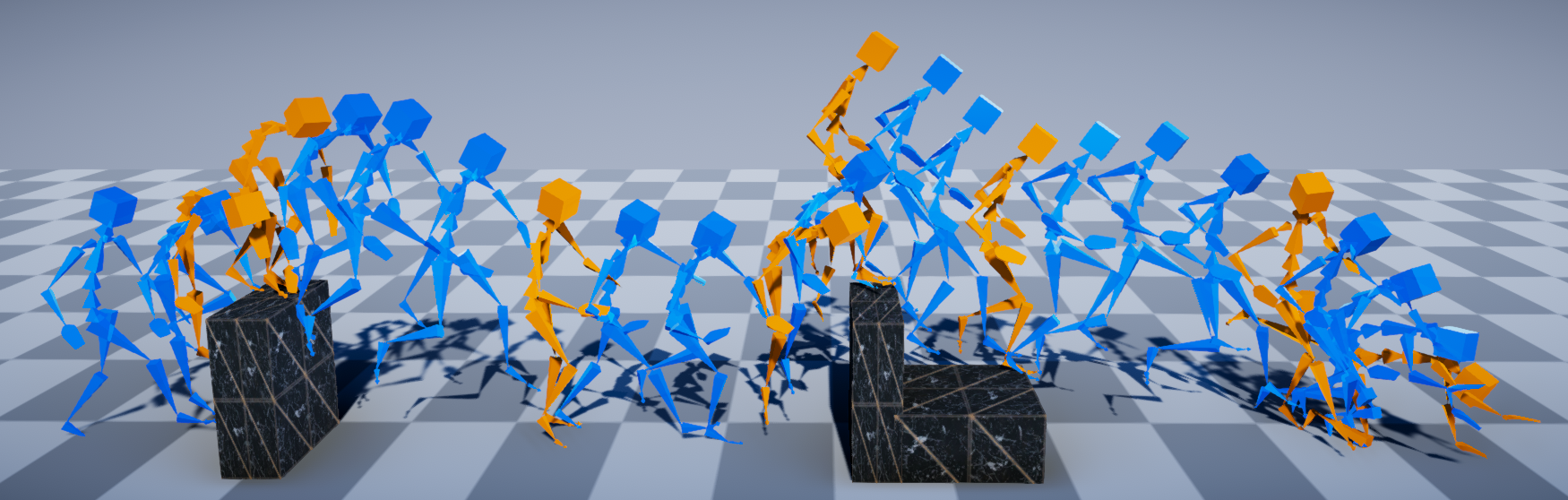

In-between motion sequences (blue) between target frames (orange) generated by our method. Given a target frame and a desired transition duration, the controlled character can dynamically adjust strategies, e.g., different step sizes, velocities, or motion types, to reach the target without visual artifacts.

Abstract

Real-time in-between motion generation is universally required in games and highly desirable in existing animation pipelines. Its core challenge lies in the need to satisfy three critical conditions simultaneously: quality, controllability, and speed. This makes any method that needs offline computation (or post-processing), or that cannot incorporate (often unpredictable) user control, undesirable. To this end, we propose a new real-time transition method to address these challenges. Our approach consists of two key components: a motion manifold and conditional transitioning. The former learns the important low-level motion features and their dynamics, while the latter synthesizes transitions conditioned on a target frame and the desired transition duration. We first learn a motion manifold that explicitly models the intrinsic transition stochasticity in human motions via a multi-modal mapping mechanism. Then, during generation, we design a transition model, which is essentially a sampling strategy that samples from the learned manifold based on the target frame and the desired transition duration. We validate our method on different datasets in tasks where no post-processing or offline computation is allowed. Through exhaustive evaluation and comparison, we show that our method is able to generate high-quality motions as measured under multiple metrics. Our method is also robust under various target frames, including extreme cases.
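
The per-frame generation loop described in the abstract can be pictured roughly as follows. This is a minimal Python sketch under stated assumptions, not the authors' implementation: `toy_sampler` and `toy_decoder` are hypothetical stand-ins for the learned transition model and the learned motion manifold, poses are reduced to plain lists of floats, and the function names and signatures are invented for illustration only.

```python
import random
from typing import Callable, List

Pose = List[float]


def toy_sampler(current: Pose, target: Pose, frames_left: int,
                rng: random.Random) -> List[float]:
    """Hypothetical stand-in for a learned transition model.

    Returns a latent step conditioned on the target frame and the
    remaining duration, with a little noise to mimic stochasticity.
    """
    return [(t - c) / frames_left + rng.gauss(0.0, 0.01)
            for c, t in zip(current, target)]


def toy_decoder(current: Pose, latent: List[float]) -> Pose:
    """Hypothetical stand-in for a learned manifold decoder.

    Here it simply adds the latent step to the current pose.
    """
    return [c + z for c, z in zip(current, latent)]


def generate_transition(start: Pose, target: Pose, duration: int,
                        sampler: Callable, decoder: Callable,
                        seed: int = 0) -> List[Pose]:
    """Roll out `duration` in-between frames, one frame per step.

    Each step uses only the current pose, the target frame, and the
    number of frames left, so no offline pass or post-processing is needed.
    """
    rng = random.Random(seed)
    frames = [list(start)]
    for step in range(duration):
        frames_left = duration - step
        latent = sampler(frames[-1], target, frames_left, rng)
        frames.append(decoder(frames[-1], latent))
    return frames


if __name__ == "__main__":
    motion = generate_transition([0.0, 0.0], [1.0, 2.0], 30,
                                 toy_sampler, toy_decoder)
    print(len(motion), motion[-1])
```

Because the target and the remaining frame count are re-read at every step, a caller could change either one mid-rollout, which is the kind of user control the abstract asks for. The toy components only illustrate the control flow and say nothing about the quality of the learned models.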

Download

| PDF, 2.27MB | Video, 68.1 MB |

Acknowledgments: The authors would like to thank Sammi (Xia Lin), Eric (Ma Bingbing), Eason (Yang Xiajun), and Rambokou (Kou Qilong) from Tencent Institute of Games for contributing useful data and for assisting with the application demonstrations during the paper's preparation, which significantly supported its completion.