A Locality-Based Neural Solver for Optical Motion Capture

Proceedings of SIGGRAPH Asia 2023, Article No. 117, pp. 1–11, Sydney, Australia, 12–15 December 2023.

Xiaoyu Pan, Bowen Zheng, Xinwei Jiang, Guanglong Xu, Xianli Gu, Jingxiang Li, Qilong Kou, He Wang, Tianjia Shao, Kun Zhou, Xiaogang Jin

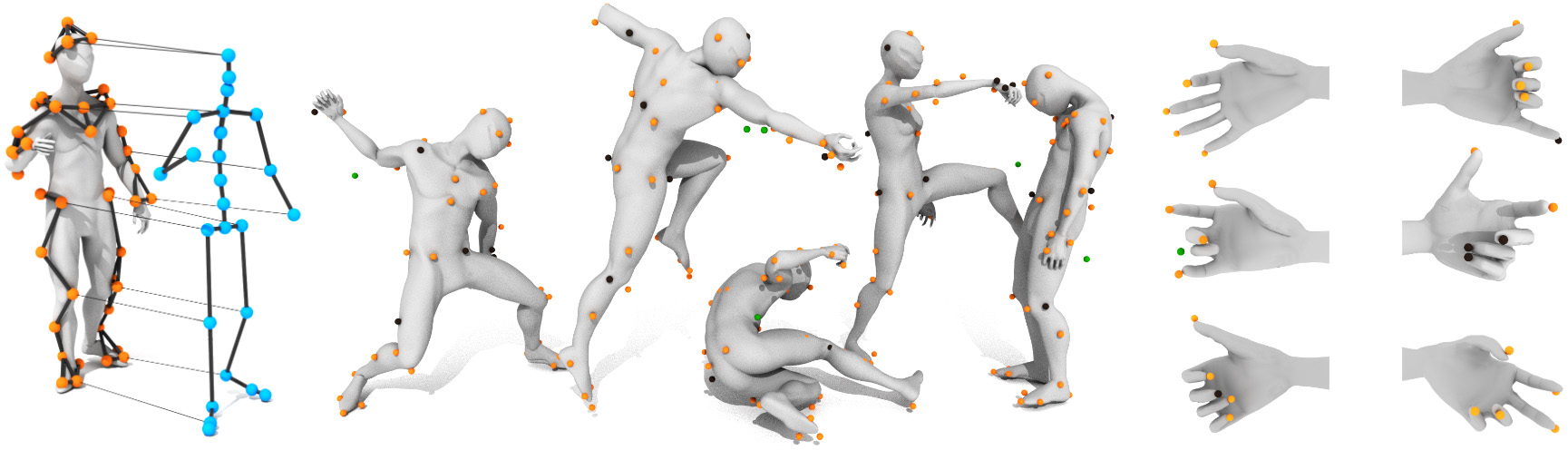

Given raw MoCap data, our method constructs a heterogeneous graph neural network (left) to solve large body motions (middle) and fine multi-fingered hand motions (right). Our method is robust to occlusions (black balls) and outliers (green balls).
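The caption says markers and joints form a *heterogeneous* graph. The sketch below is not the authors' network. It only illustrates what "heterogeneous" means here: markers and joints are different node types, and each relation type (marker to marker, marker to joint, joint to joint) has its own weights. The class name `HeteroGraphLayer`, the mean aggregation, the ReLU, the random initialization and the toy edge list are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class HeteroGraphLayer:
    """Hypothetical single message-passing step on a 'marker'/'joint' graph.

    Each node type has its own self-transform, and each relation
    (source type, target type) has its own message weights. Incoming
    messages are averaged per relation, summed, then passed through ReLU.
    """

    def __init__(self, dim, node_types, relations, seed=0):
        rng = random.Random(seed)
        # Sorted iteration keeps the random initialization reproducible.
        self.self_w = {t: rand_matrix(dim, dim, rng) for t in sorted(node_types)}
        self.rel_w = {r: rand_matrix(dim, dim, rng) for r in sorted(relations)}

    def forward(self, feats, node_type, edges):
        # Collect transformed messages, grouped by target node and relation.
        inbox = defaultdict(lambda: defaultdict(list))
        for src, dst in edges:
            rel = (node_type[src], node_type[dst])
            inbox[dst][rel].append(matvec(self.rel_w[rel], feats[src]))
        out = {}
        for node, h in feats.items():
            acc = matvec(self.self_w[node_type[node]], h)
            for msgs in inbox[node].values():
                mean = [sum(col) / len(msgs) for col in zip(*msgs)]
                acc = [a + m for a, m in zip(acc, mean)]
            out[node] = relu(acc)
        return out


# Toy graph with three markers and two joints (all names are hypothetical).
node_type = {"m0": "marker", "m1": "marker", "m2": "marker",
             "j0": "joint", "j1": "joint"}
edges = [("m0", "m1"), ("m1", "m0"), ("m1", "m2"), ("m2", "m1"),  # neighboring markers
         ("m0", "j0"), ("m1", "j0"), ("m2", "j1"),                 # markers to nearby joints
         ("j0", "j1"), ("j1", "j0")]                                # skeleton bone
relations = {(node_type[s], node_type[d]) for s, d in edges}
layer = HeteroGraphLayer(dim=3, node_types={"marker", "joint"}, relations=relations)
feats = {n: [0.1 * i, 0.2, -0.1] for i, n in enumerate(node_type)}
out = layer.forward(feats, node_type, edges)
print(out["m0"])
```

Because `m0` only receives messages from `m1` in this toy graph, changing the features of `m2` leaves `out["m0"]` unchanged after one layer; information spreads one hop per layer, which is one way to picture the locality argument in the abstract.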

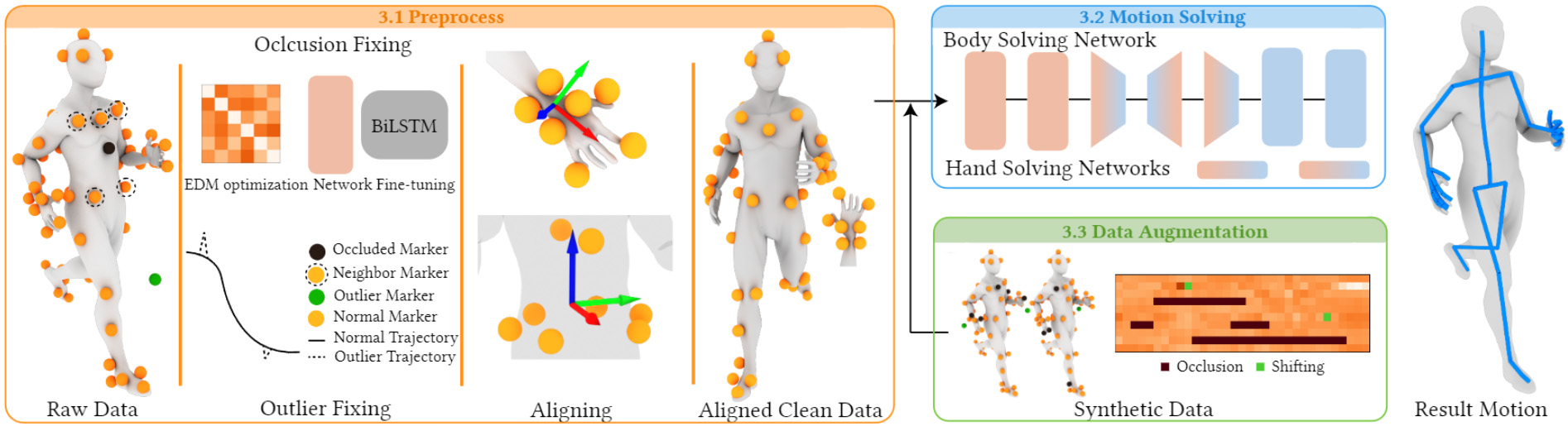

The pipeline of our method consists of three main modules. Left: Given the raw MoCap data, we clean it by filling occluded markers using the EDM algorithm and a bi-directional neural network, and by identifying outliers as peaks on the marker acceleration curve. We then remove the global transformation of the body and hand markers by explicitly computing their local coordinates relative to the waist and wrist markers. Top right: Using the aligned, clean markers, we solve the body and hand motions separately with heterogeneous graph neural networks, detailed in Fig. 3. Bottom right: We augment our dataset by randomly sampling gaps and adding shifts based on real MoCap data.
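The caption describes finding outliers by locating peaks on a marker's acceleration curve. A minimal sketch of that idea follows, assuming unit frame spacing, a central second difference as the acceleration estimate, and a threshold of `k` times the median acceleration. The function names, the median baseline and the default `k=5.0` are our assumptions, not settings from the paper.

```python
import math
import statistics


def acceleration_magnitudes(traj):
    """Central second difference |p[t+1] - 2 p[t] + p[t-1]| per frame.

    traj: list of (x, y, z) positions of one marker, one entry per frame.
    Returns a list the same length as traj; the two endpoints are 0.0.
    """
    n = len(traj)
    acc = [0.0] * n
    for t in range(1, n - 1):
        a = [traj[t + 1][k] - 2.0 * traj[t][k] + traj[t - 1][k] for k in range(3)]
        acc[t] = math.sqrt(sum(c * c for c in a))
    return acc


def find_outlier_frames(traj, k=5.0):
    """Return frames whose acceleration is a local peak above k * median.

    k = 5.0 is a hypothetical default chosen for illustration.
    """
    acc = acceleration_magnitudes(traj)
    baseline = statistics.median(acc[1:-1]) if len(acc) > 2 else 0.0
    threshold = k * max(baseline, 1e-6)  # guard against a zero baseline
    outliers = []
    for t in range(1, len(acc) - 1):
        if acc[t] > threshold and acc[t] >= acc[t - 1] and acc[t] >= acc[t + 1]:
            outliers.append(t)
    return outliers


# Smooth, uniformly accelerating marker with a single-frame glitch at frame 10.
traj = [(0.01 * t * t, 0.0, 0.0) for t in range(30)]
spiky = list(traj)
spiky[10] = (traj[10][0], 0.5, 0.0)
print(find_outlier_frames(spiky))  # [10]
```

A one-frame jump produces a +1, -2, +1 pattern in the second difference, so the largest acceleration sits on the corrupted frame itself; requiring a local maximum keeps the two neighboring frames from also being flagged.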

Abstract

We present a novel locality-based learning method for cleaning and solving optical motion capture data. Given noisy marker data, we propose a new heterogeneous graph neural network that treats markers and joints as different types of nodes and uses graph convolution operations to extract the local features of markers and joints and transform them into clean motions. To deal with anomalous markers (e.g., occluded or with large tracking errors), our key insight is that a marker's motion shows strong correlations with the motions of its immediate neighboring markers but weaker correlations with other markers, a.k.a. locality, which enables us to efficiently fill in missing markers (e.g., due to occlusion). Additionally, we identify marker outliers caused by tracking errors by investigating their acceleration profiles. We also propose a training regime based on representation learning and data augmentation, in which the model is trained on masked data. The masking schemes aim to mimic the occluded and noisy markers often observed in real data. Finally, we show that our method achieves high accuracy on multiple metrics across various datasets. Extensive comparisons show that our method reduces the position error of predicted occluded markers by approximately 20% compared with state-of-the-art methods, which leads to a further 30% error reduction in the reconstructed joint rotations and positions. The code and data for this paper are available at github.com/localmocap/LocalMoCap.
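The abstract describes training on masked data that mimics occluded and noisy markers, and the pipeline figure mentions randomly sampled gaps and added shifts. The sketch below shows one simple way to corrupt a clean clip along those lines. It is only an illustration: the function `mask_and_shift`, zero-filling hidden markers, and the uniform distributions for gap length and shift size are hypothetical placeholders, whereas the paper bases its sampling on real MoCap data.

```python
import random


def mask_and_shift(clip, num_gaps=2, max_gap=10, num_shifts=1, max_shift=0.05, seed=None):
    """Corrupt a clean clip to mimic occlusions (gaps) and tracking errors (shifts).

    clip: list of frames, each a list of (x, y, z) marker positions.
    Returns (corrupted, visible), where visible[t][m] is False inside a gap.
    All counts and ranges are hypothetical uniform placeholders.
    """
    rng = random.Random(seed)
    n_frames, n_markers = len(clip), len(clip[0])
    corrupted = [[list(p) for p in frame] for frame in clip]  # leave input untouched
    visible = [[True] * n_markers for _ in range(n_frames)]

    # Occlusion: one marker disappears for a contiguous run of frames.
    for _ in range(num_gaps):
        m = rng.randrange(n_markers)
        length = rng.randint(1, min(max_gap, n_frames))
        start = rng.randrange(n_frames - length + 1)
        for t in range(start, start + length):
            visible[t][m] = False
            corrupted[t][m] = [0.0, 0.0, 0.0]  # zero-fill; the mask is returned separately

    # Tracking error: one marker is offset by a constant vector for a run of frames.
    for _ in range(num_shifts):
        m = rng.randrange(n_markers)
        length = rng.randint(1, min(max_gap, n_frames))
        start = rng.randrange(n_frames - length + 1)
        offset = [rng.uniform(-max_shift, max_shift) for _ in range(3)]
        for t in range(start, start + length):
            if visible[t][m]:
                corrupted[t][m] = [c + d for c, d in zip(corrupted[t][m], offset)]
    return corrupted, visible


# A clean 50-frame clip with 4 static markers; the clean clip stays the training target.
clean = [[(1.0, 2.0, 3.0) for _ in range(4)] for _ in range(50)]
noisy, vis = mask_and_shift(clean, seed=7)
hidden = sum(not v for row in vis for v in row)
print(f"{hidden} marker observations hidden")
```

Passing a fixed `seed` makes a corruption reproducible, which is convenient for debugging; during training one would normally draw a fresh corruption for every sample.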

Download

PDF (31.3 MB) | Video (233 MB) | Source code and data on GitHub