RSMT: Real-time Stylized Motion Transition for Characters

In Special Interest Group on Computer Graphics and Interactive Techniques Conference Proceedings (SIGGRAPH '23 Conference Proceedings)

August 06–10, 2023, Los Angeles, CA, USA. ACM, New York, NY, USA, Article No.: 61, Pages 1–10.

Xiangjun Tang, Linjun Wu, He Wang, Bo Hu, Xu Gong, Yuchen Liao, Songnan Li, Qilong Kou, Xiaogang Jin

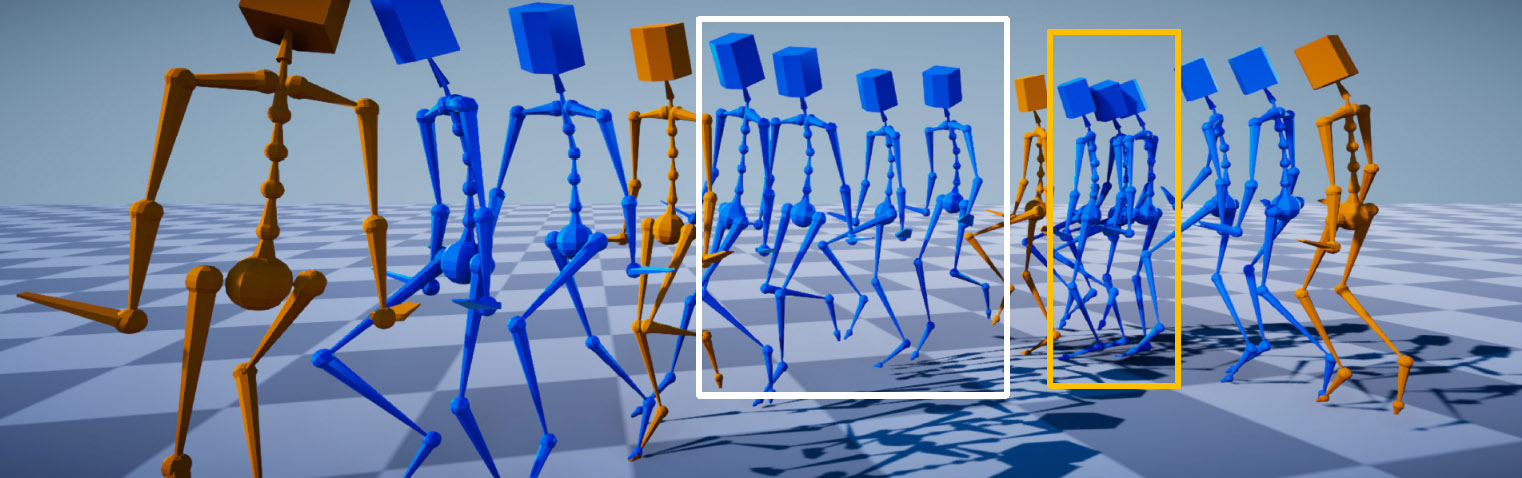

Given a target frame, a desired transition duration and the required style, the character can dynamically adjust motions to reach the target with the desired style. Specifically, the character in the white box needs bigger steps to reach the target within the specified duration, while the character in the orange box needs smaller steps to reduce the speed for the same reason.

Abstract

Styled online in-between motion generation has important application scenarios in computer animation and games. Its core challenge lies in the need to satisfy four critical requirements simultaneously: generation speed, motion quality, style diversity, and synthesis controllability. While the first two challenges demand a delicate balance between simple fast models and learning capacity for generation quality, the latter two are rarely investigated together in existing methods, which largely focus on either control without style or uncontrolled stylized motions. To this end, we propose a Real-time Stylized Motion Transition method (RSMT) to achieve all aforementioned goals. Our method consists of two critical, independent components: a general motion manifold model and a style motion sampler. The former acts as a high-quality motion source and the latter synthesizes styled motions on the fly under control signals. Since both components can be trained separately on different datasets, our method provides great flexibility, requires less data, and generalizes well when no/few samples are available for unseen styles. Through exhaustive evaluation, our method proves to be fast, high-quality, versatile, and controllable. The code and data are available at https://github.com/yuyujunjun/RSMT-Realtime-Stylized-Motion-Transition.