Decoupling Contact for Fine-Grained Motion Style Transfer

Proceedings of SIGGRAPH Asia 2024, Tokyo, Japan, 3–6 December 2024, Pages 1–11

Xiangjun Tang, Linjun Wu, He Wang, Yiqian Wu, Bo Hu, Songnan Li, Xu Gong, Yuchen Liao, Qilong Kou, Xiaogang Jin

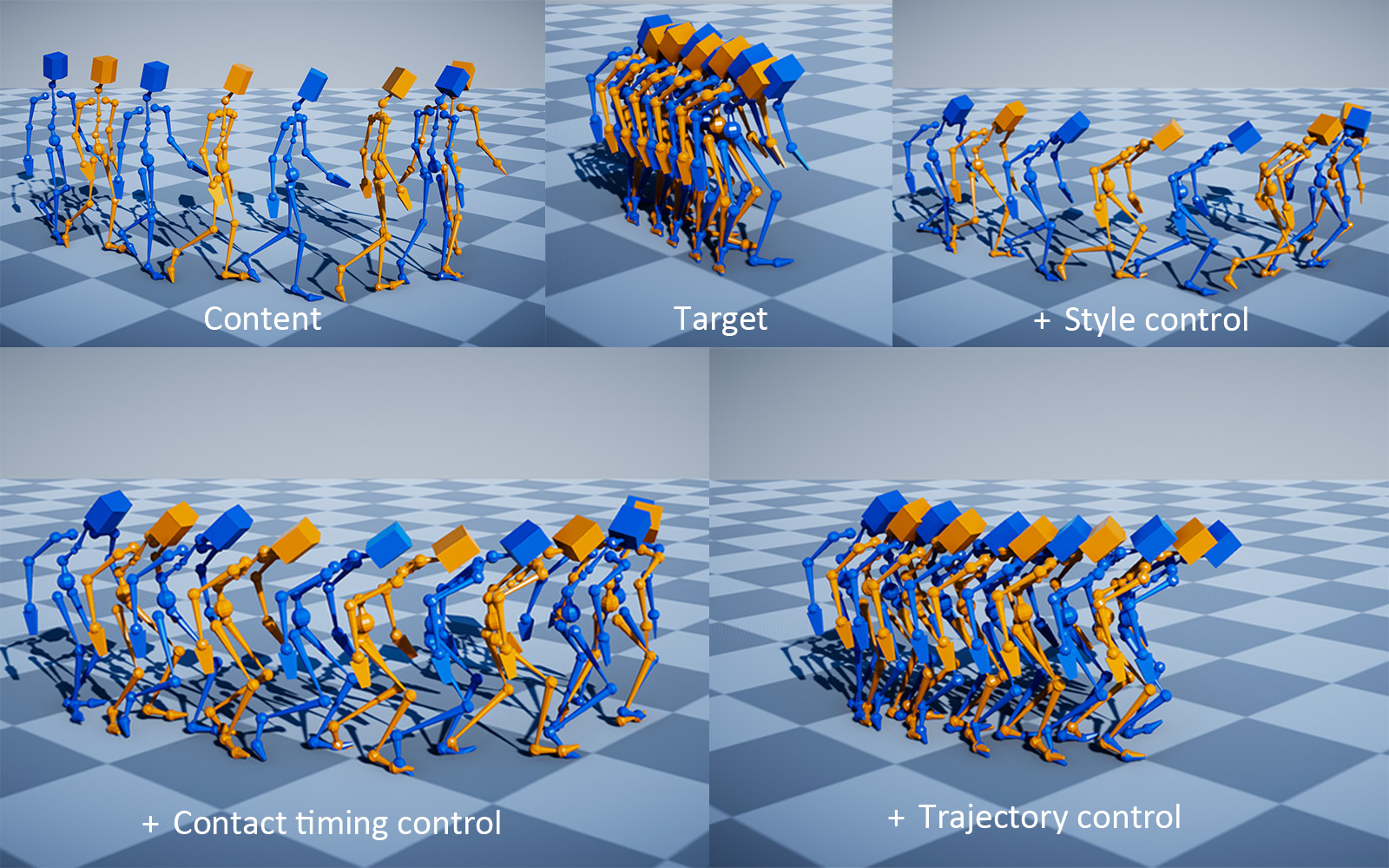

Our method can independently control style, contact timing, and trajectory, enabling fine-grained motion style transfer. Given a content motion (a) and a target motion (b) in an "old man" style (bending posture, fast pace, and slow speed), our approach can progressively add the "style" (c), "contact timing" (d), and "trajectory" (e) of the target motion to the content, which previous methods could not achieve. The result in (c) reproduces the target motion's bending pose; the result in (d) shows more frequent contacts within the same duration, indicating a faster pace; and the result in (e) shows a slower speed, precisely replicating the entire "old man" style. Every frame in which either foot makes contact with the ground is shown.
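The caption ties contact frequency to pace. As a rough illustration only, assuming per-frame foot positions in metres with y pointing up, contacts are often labeled with a simple height-and-speed threshold heuristic, and pace can then be read off as contact onsets per second. The function names and threshold values below are hypothetical; this is not the contact labeling used in the paper.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def detect_contacts(foot_pos: Sequence[Vec3], fps: float,
                    height_thresh: float = 0.05,
                    speed_thresh: float = 0.3) -> List[bool]:
    """Label a frame as a contact if the foot is low and nearly still.

    Assumes (x, y, z) positions in metres with y up. The thresholds are
    hypothetical defaults, not values taken from the paper.
    """
    contacts = []
    n = len(foot_pos)
    for t in range(n):
        if n < 2:
            speed = 0.0
        else:
            # Backward finite difference; frame 0 uses the forward one.
            a, b = (foot_pos[t - 1], foot_pos[t]) if t > 0 else (foot_pos[0], foot_pos[1])
            speed = math.dist(a, b) * fps
        contacts.append(foot_pos[t][1] < height_thresh and speed < speed_thresh)
    return contacts


def contact_onsets(contacts: Sequence[bool]) -> List[int]:
    """Frames where a contact starts (non-contact -> contact transition)."""
    return [t for t, c in enumerate(contacts) if c and (t == 0 or not contacts[t - 1])]


def contacts_per_second(contacts: Sequence[bool], fps: float) -> float:
    """Contact onsets per second: a crude proxy for walking pace."""
    duration = len(contacts) / fps
    return len(contact_onsets(contacts)) / duration if duration > 0 else 0.0
```

Under this heuristic, a motion that fits more contact onsets into the same duration, as in panel (d), yields a higher `contacts_per_second` value.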

Abstract

Motion style transfer changes the style of a motion while retaining its content, and is useful in computer animation and games. Contact is an essential component of motion style transfer that should be controlled explicitly in order to express the style vividly while enhancing motion naturalness and quality. However, it remains unknown how to decouple and control contact to achieve fine-grained control in motion style transfer. In this paper, we present a novel style transfer method for fine-grained control over contacts that achieves both motion naturalness and spatiotemporal variations of style. Based on our empirical evidence, we propose controlling contact indirectly through the hip velocity, which can be further decomposed into the trajectory and the contact timing. To this end, we propose a new model that explicitly models the correlations between motions and trajectory, contact timing, and style, allowing us to decouple and control each separately. Our approach is built around a motion manifold, where hip controls can be easily integrated into a Transformer-based decoder. It is versatile: it can generate motions directly, or serve as a post-processing step for existing methods to improve their quality and contact controllability. In addition, we propose a new metric that measures a correlation pattern of motions based on our empirical evidence and aligns well with human perception of motion naturalness. Extensive evaluations show that our method outperforms existing methods in terms of style expressivity and motion quality.
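To make the idea of separating trajectory from timing more concrete, here is a simplified, hypothetical sketch. It splits a ground-plane hip track into a timing-free path keyed by arc length and a per-frame arc-length schedule, then replays the same path on a new schedule, which changes the hip velocity without changing where the hip travels. This is a hand-written geometric analogy under our own assumptions, not the paper's learned model (which integrates hip controls into a Transformer-based decoder); arc length stands in for, rather than reproduces, the paper's contact-timing signal, and all function names are illustrative.

```python
import math
from bisect import bisect_right
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]  # hip position projected onto the ground plane (x, z)


def decompose(hip_xz: Sequence[Vec2]) -> Tuple[List[float], List[Vec2]]:
    """Split a hip track into a timing schedule s(t) and the path geometry.

    s[t] is the cumulative distance travelled up to frame t; the path is
    the list of positions, keyed by those arc-length values.
    """
    s = [0.0]
    for a, b in zip(hip_xz, hip_xz[1:]):
        s.append(s[-1] + math.dist(a, b))
    return s, list(hip_xz)


def point_at(s_vals: Sequence[float], points: Sequence[Vec2], target: float) -> Vec2:
    """Linearly interpolate the path at a given arc length (clamped to the ends)."""
    target = min(max(target, s_vals[0]), s_vals[-1])
    i = bisect_right(s_vals, target) - 1
    if i >= len(s_vals) - 1:
        return points[-1]
    seg = s_vals[i + 1] - s_vals[i]
    u = 0.0 if seg == 0 else (target - s_vals[i]) / seg
    (x0, z0), (x1, z1) = points[i], points[i + 1]
    return (x0 + u * (x1 - x0), z0 + u * (z1 - z0))


def recompose(s_vals: Sequence[float], points: Sequence[Vec2],
              new_timing: Sequence[float]) -> List[Vec2]:
    """Replay the same path under a different per-frame arc-length schedule."""
    return [point_at(s_vals, points, s) for s in new_timing]


def hip_velocity(track: Sequence[Vec2], fps: float) -> List[Vec2]:
    """Per-frame ground-plane hip velocity via forward differences."""
    return [((b[0] - a[0]) * fps, (b[1] - a[1]) * fps) for a, b in zip(track, track[1:])]
```

For example, on an L-shaped track of length 4, `recompose(s, pts, [0.5 * k for k in range(9)])` visits the same corner but advances half as far per frame, so every `hip_velocity` sample has half the original magnitude.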