Mob-FGSR: Frame Generation and Super Resolution for Mobile Real-Time Rendering

Proceedings of SIGGRAPH 2024, Denver, USA, 28 July-1 August, Pages 1–11

Sipeng Yang, Qingchuan Zhu, Junhao Zhuge, Qiang Qiu, Chen Li, Yuzhong Yan, Huihui Xu, Ling-qi Yan, Xiaogang Jin

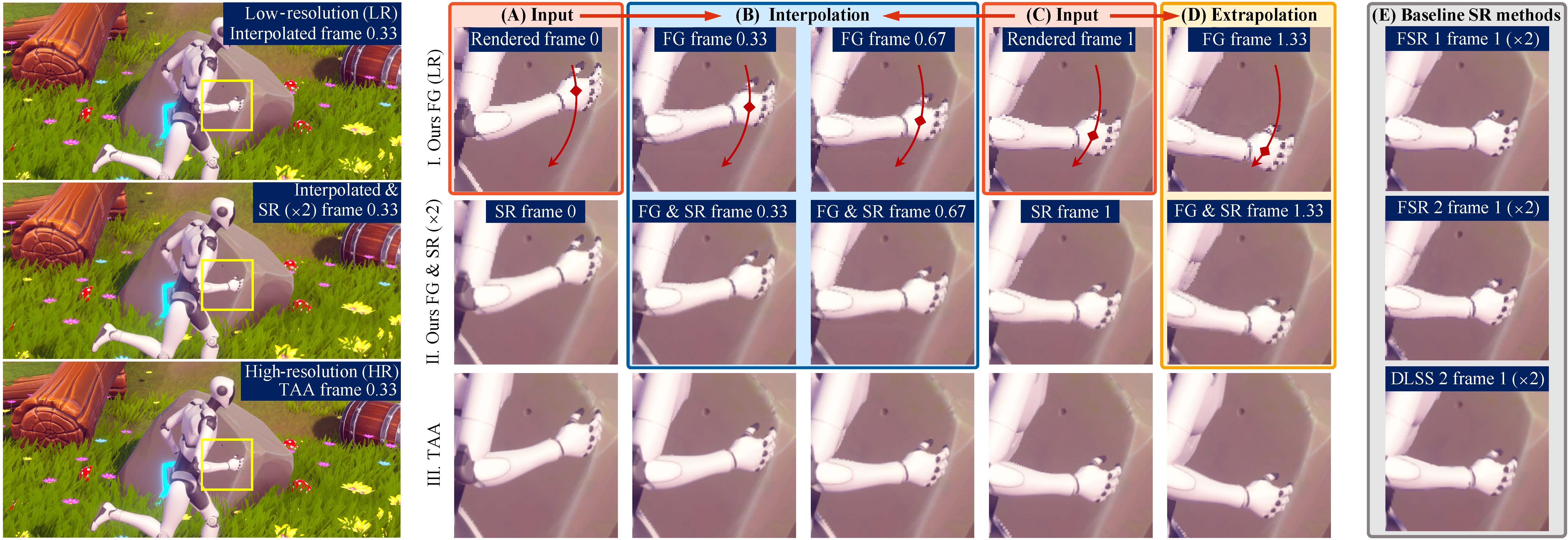

Frame generation (FG) and super resolution (SR) results of our method. Requiring only color, depth, and motion vectors from frames rendered at times 0 (A) and 1 (C), our approach efficiently produces interpolated (B) / extrapolated (D) frames and their SR counterparts at desired times. The left large images illustrate an interpolated frame (upper), its SR result (middle), and the high-resolution temporal anti-aliasing (TAA) reference (bottom). The right images show enlarged views of the yellow-framed regions of the interpolated frame (I.), their SR results (II.), and TAA references (III.), demonstrating our method’s smooth motion estimation and high-quality SR results. (E) displays ×2 SR results of commercial applications FSR 1, FSR 2, and DLSS 2. Experiments show that our approach achieves results comparable to the best of these solutions. Notably, our models have short runtimes, processing FG at 720P in 2.2ms and FG & SR at 1080P in 2.3ms on a Snapdragon 8 Gen 3 processor.

Abstract

Recent advances in supersampling for frame generation and super resolution have significantly improved real-time rendering performance. However, because these methods rely heavily on the most recent features of high-end GPUs, they are impractical for mobile platforms, which are limited by lower GPU capabilities and a lack of dedicated optical flow estimation hardware. We propose Mob-FGSR, a novel lightweight supersampling framework tailored for mobile devices that integrates frame generation with super resolution to effectively improve real-time rendering performance. Our method introduces a splat-based motion vector reconstruction technique, which allows accurate pixel-level motion estimation for both interpolation and extrapolation at desired times without the need for high-end GPUs or rendering data from generated frames. Fast image generation models then construct interpolated or extrapolated frames and improve resolution, giving users several options to choose from. Our runtime models operate without neural networks, ensuring their applicability to mobile devices. Extensive testing shows that our framework outperforms other lightweight solutions and rivals the performance of algorithms designed specifically for high-end GPUs. On-device testing confirms our models' minimal runtime, demonstrating their potential to benefit a wide range of mobile real-time rendering applications. More information and an Android demo can be found at: https://mob-fgsr.github.io.
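To make the idea of splat-based motion vector reconstruction more concrete, the following is a minimal sketch of generic forward splatting with a nearest-depth test. It is not the paper's algorithm, whose details the abstract does not give. The function name `splat_motion_vectors`, the motion vector convention (a pixel at position p in frame 1 was at p - mv in frame 0), the linear-motion assumption, and the nearest-pixel rounding are all assumptions made for illustration.

```python
import math


def splat_motion_vectors(mv, depth, t):
    """Forward-splat frame-1 motion vectors to time t (hypothetical helper).

    mv[y][x]    -- (dx, dy): the pixel at (x, y) in frame 1 was at
                   (x - dx, y - dy) in frame 0 (assumed convention).
    depth[y][x] -- depth of that pixel; smaller means closer to the camera.
    t           -- target time; 0 < t < 1 interpolates, t > 1 extrapolates.

    Returns a grid of motion vectors at time t; None marks holes that no
    source pixel landed on.
    """
    h, w = len(depth), len(depth[0])
    out_mv = [[None] * w for _ in range(h)]
    out_depth = [[math.inf] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = mv[y][x]
            # Assume linear motion: position at time t lies on the line
            # through the frame-0 and frame-1 positions.
            tx = math.floor(x - (1.0 - t) * dx + 0.5)
            ty = math.floor(y - (1.0 - t) * dy + 0.5)
            if not (0 <= tx < w and 0 <= ty < h):
                continue  # splat lands outside the image
            # When several pixels land on the same target, keep the closest.
            if depth[y][x] < out_depth[ty][tx]:
                out_depth[ty][tx] = depth[y][x]
                out_mv[ty][tx] = (dx, dy)
    return out_mv


# A 1x4 image: a foreground pixel at x=2 moved 2 px to the right between
# frame 0 and frame 1; the background is static.
mv = [[(0.0, 0.0), (0.0, 0.0), (2.0, 0.0), (0.0, 0.0)]]
depth = [[1.0, 1.0, 0.2, 1.0]]

interpolated = splat_motion_vectors(mv, depth, 0.5)
extrapolated = splat_motion_vectors(mv, depth, 1.5)
```

In this toy example the foreground pixel lands on x=1 at t=0.5 and on x=3 at t=1.5, winning the depth test against the static background in both cases and leaving a hole at x=2 that a real pipeline would need to fill.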