BRNet: Exploring Comprehensive Features for Monocular Depth Estimation

Proceedings of the European Conference on Computer Vision (ECCV 2022), Tel Aviv, Oct. 23-27, 2022.

Wencheng Han, Junbo Yin, Xiaogang Jin, Xiangdong Dai, Jianbing Shen

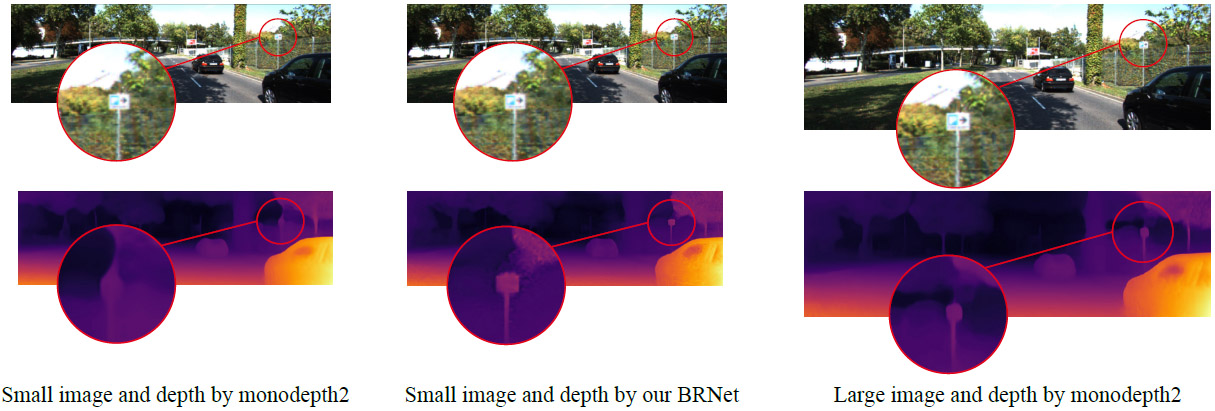

Predictions from monodepth2 and our BRNet at different input resolutions. The board in the images shows no obvious difference between the two resolutions, yet monodepth2's predictions for it differ substantially. This motivates us to investigate the cause of the differing predictions and to devise a model that more fully utilizes the information in the images for depth estimation.

Abstract

Self-supervised monocular depth estimation has recently achieved encouraging performance. The prevailing consensus is that high-resolution inputs often yield better results. However, we find that the performance gap between high and low resolutions in this task lies mainly in the inappropriate feature representation of the widely used U-Net backbone rather than in the information difference between the inputs. In this paper, we address the comprehensive feature representation problem for self-supervised depth estimation by attending to both local and global feature representation. Specifically, we first provide an in-depth analysis of the influence of different input resolutions and find that receptive fields play a more crucial role than the information disparity between inputs. To this end, we propose a bilateral depth encoder that can fully exploit both detailed and global information. It benefits from broader receptive fields and thus achieves substantial improvements. Furthermore, we propose a residual decoder that facilitates depth regression and saves computation by focusing on the information difference between layers. We name our new depth estimation model the Bilateral Residual Depth Network (BRNet). Experimental results show that BRNet achieves new state-of-the-art performance on the KITTI benchmark with three types of self-supervision. Code is available at: https://github.com/wencheng256/BRNet.
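The abstract attributes the resolution gap to receptive fields rather than to missing information. The sketch below shows why input resolution changes what a fixed network "sees". It assumes a ResNet-18-style stack of (kernel, stride) layers (an illustrative assumption: 1x1 shortcut convolutions are ignored and this is not BRNet's encoder) and the example widths 640 and 1024, which are also assumptions. The theoretical receptive field is constant in pixels, so it covers a smaller fraction of the scene as the input gets wider. Note that this is the theoretical receptive field; the effective one is usually smaller.

```python
# Hypothetical (kernel_size, stride) stack loosely modeled on ResNet-18's main
# path; shortcut 1x1 convolutions are ignored. Not the encoder used by BRNet.
LAYERS = [
    (7, 2),                          # stem convolution
    (3, 2),                          # max pooling
    (3, 1), (3, 1), (3, 1), (3, 1),  # stage 1
    (3, 2), (3, 1), (3, 1), (3, 1),  # stage 2 (first conv downsamples)
    (3, 2), (3, 1), (3, 1), (3, 1),  # stage 3
    (3, 2), (3, 1), (3, 1), (3, 1),  # stage 4
]


def receptive_field(layers):
    """Theoretical receptive field, in input pixels, of the last layer.

    Standard recurrence: r_out = r_in + (k - 1) * j_in and j_out = j_in * s,
    where j is the cumulative stride between neighbouring output units.
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j
        j *= s
    return r


def coverage(rf, width):
    """Fraction of the image width spanned by one receptive field (capped at 1)."""
    return min(rf / width, 1.0)


rf = receptive_field(LAYERS)
for w, h in [(640, 192), (1024, 320)]:  # assumed example input sizes
    print(f"{w}x{h}: receptive field {rf}px spans {coverage(rf, w):.0%} of the width")
```

The same weights therefore aggregate context over a noticeably smaller share of the scene at the higher resolution. This is consistent with the abstract's motivation for an encoder with broader receptive fields.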
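The abstract says the residual decoder saves computation "by focusing on the information difference between different layers." As a rough intuition only, the sketch below shows a generic coarse-to-fine residual scheme. This is a swapped-in, commonly used technique, not the decoder described in the paper. Each finer level predicts only a correction that is added to the upsampled prediction from the level above, instead of regressing the full map again. The function names `upsample2x`, `add_maps`, and `residual_decode` and all numbers are hypothetical. Plain nested lists stand in for tensors so the example has no dependencies.

```python
def upsample2x(grid):
    """Nearest-neighbour 2x upsampling of a 2-D list (rows x cols)."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat the row vertically
    return out


def add_maps(a, b):
    """Element-wise sum of two equally sized 2-D lists."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("maps must have the same shape")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def residual_decode(coarse, residuals):
    """Hypothetical coarse-to-fine decoding: each level adds a residual
    to the 2x-upsampled prediction of the previous (coarser) level."""
    pred = coarse
    outputs = [pred]
    for res in residuals:
        pred = add_maps(upsample2x(pred), res)
        outputs.append(pred)
    return outputs


coarse = [[4.0, 8.0]]  # hypothetical 1x2 coarsest depth map
residuals = [
    [[0.5, -0.5, 0.0, 1.0], [0.0, 0.0, -1.0, 0.0]],  # 2x4 correction
    [[0.0] * 8 for _ in range(4)],                    # 4x8 correction (all zero)
]
outputs = residual_decode(coarse, residuals)
```

In a real network the residuals would come from learned heads on decoder features. The design point illustrated here is only that finer levels need to encode what the coarser level got wrong.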