A General Implicit Framework for Fast NeRF Composition and Rendering

The 38th Annual AAAI Conference on Artificial Intelligence (AAAI 2024), February 20-27, 2024, Vancouver, Canada.

Xinyu Gao, Ziyi Yang, Yunlu Zhao, Yuxiang Sun, Xiaogang Jin, Changqing Zou

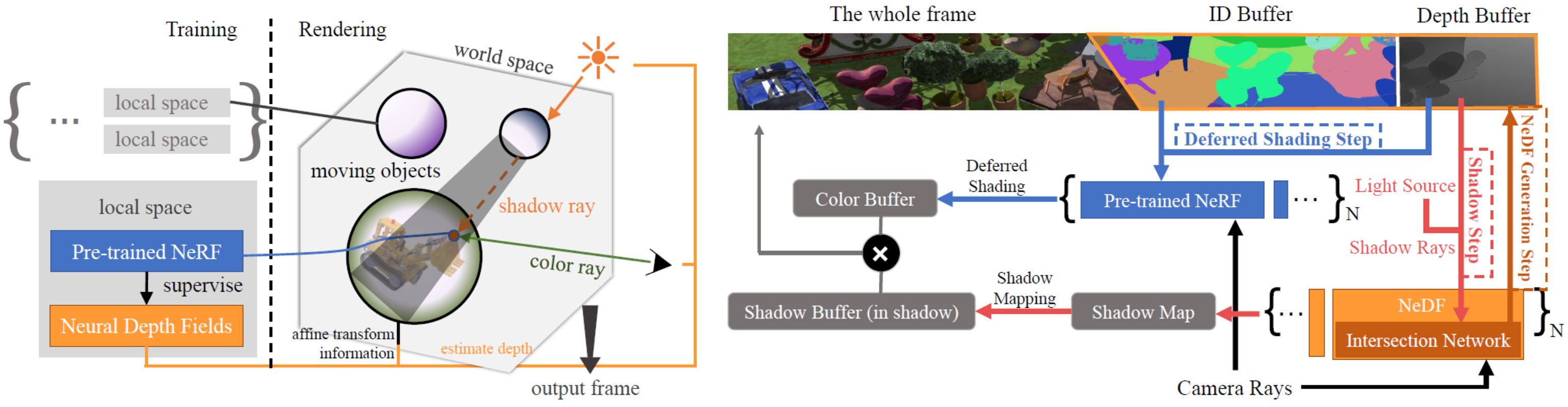

An overview of our framework (left) and data flow (right). During training, we use Neural Depth Fields (NeDF) to represent the implicit surface within each object's local space, under the supervision of a pre-trained NeRF. Rendering has three main steps: 1) the "NeDFs generation step", in which a collection of NeDFs creates a depth buffer and an ID buffer; 2) the "Deferred shading step", in which a collection of NeRFs uses those two buffers to fill a color buffer without shadows; 3) the "Shadow step", in which shadow rays are generated from the depth buffer and the NeDFs are queried again to produce a shadow map. Finally, the color buffer and the shadow buffer are multiplied to produce the result.
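The three render-time steps in the caption can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: analytic spheres stand in for trained NeDFs, a constant albedo stands in for each NeRF query, and the names `sphere_depth`, `render`, the scene layout, and the 0.3 shadow attenuation are all assumptions made for illustration.

```python
import numpy as np

EPS = 1e-4  # hypothetical offset that rejects self-intersections


def sphere_depth(origins, dirs, center, radius):
    """Stand-in for a NeDF: distance along each unit ray to the first hit (inf on a miss)."""
    oc = origins - center
    b = np.sum(oc * dirs, axis=-1)
    c = np.sum(oc * oc, axis=-1) - radius ** 2
    disc = b * b - c
    sq = np.sqrt(np.maximum(disc, 0.0))
    t_near, t_far = -b - sq, -b + sq
    t = np.where(t_near > EPS, t_near, t_far)
    return np.where((disc > 0.0) & (t > EPS), t, np.inf)


def render(origins, dirs, objects, light_pos, shadow_strength=0.3):
    # Step 1: every depth field is queried; the nearest hit fills the depth and ID buffers.
    depths = np.stack([sphere_depth(origins, dirs, o["center"], o["radius"]) for o in objects])
    depth = depths.min(axis=0)
    hit = np.isfinite(depth)
    ids = np.where(hit, depths.argmin(axis=0), -1)

    # Step 2: deferred shading; each object is only evaluated for the pixels it owns.
    color = np.zeros(origins.shape[:-1] + (3,))
    for k, o in enumerate(objects):
        color[ids == k] = o["albedo"]  # a NeRF query would go here

    # Step 3: shadow rays from the surface points toward an analytical point light.
    points = origins + np.where(hit, depth, 0.0)[..., None] * dirs
    to_light = light_pos - points
    light_dist = np.linalg.norm(to_light, axis=-1)
    light_dirs = to_light / light_dist[..., None]
    occluder = np.stack(
        [sphere_depth(points, light_dirs, o["center"], o["radius"]) for o in objects]
    ).min(axis=0)
    shadow = np.where(hit & (occluder < light_dist), shadow_strength, 1.0)

    # Final composite: color buffer multiplied by shadow buffer.
    return color * shadow[..., None], depth, ids, shadow


# Toy scene: a large "ground" sphere (ID 0) and a small ball (ID 1) lit from above.
objects = [
    {"center": np.array([0.0, -100.0, 0.0]), "radius": 100.0, "albedo": np.array([0.8, 0.8, 0.8])},
    {"center": np.array([0.0, 1.0, 0.0]), "radius": 0.5, "albedo": np.array([1.0, 0.2, 0.2])},
]
camera = np.array([0.0, 2.0, 6.0])
targets = np.array([[0.0, 1.0, 0.0], [0.2, 0.0, 0.0], [3.0, 0.0, 0.0], [0.0, 3.0, 6.0]])
dirs = targets - camera
dirs = dirs / np.linalg.norm(dirs, axis=-1, keepdims=True)
origins = np.broadcast_to(camera, dirs.shape)
image, depth, ids, shadow = render(origins, dirs, objects, light_pos=np.array([0.0, 10.0, 0.0]))
```

In this toy scene the four rays hit the ball, a ground point under the ball, a ground point away from it, and nothing. Only the second is darkened, because its shadow ray toward the light is blocked by the ball.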

Abstract

A variety of Neural Radiance Fields (NeRF) methods have recently achieved remarkable success in rendering speed. However, current acceleration methods are specialized and incompatible with various implicit methods, preventing real-time composition over various types of NeRF works. Because NeRF relies on sampling along rays, it is possible to provide general guidance for acceleration. To that end, we propose a general implicit pipeline for composing NeRF objects quickly. Our method enables the casting of dynamic shadows within or between objects using analytical light sources while allowing multiple NeRF objects to be seamlessly placed and rendered together with arbitrary rigid transformations. At its core, our work introduces a new surface representation known as Neural Depth Fields (NeDF) that quickly determines the spatial relationship between objects by allowing direct intersection computation between rays and implicit surfaces. It leverages an intersection neural network to query NeRF for acceleration instead of depending on an explicit spatial structure. Our proposed method is the first to enable both the progressive and interactive composition of NeRF objects. Additionally, it serves as a previewing plugin for a range of existing NeRF works.
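One way to picture how a depth field defined in an object's local space can be reused under an arbitrary rigid transformation is to map each world-space ray into that local space before querying. The sketch below assumes the placement convention x_world = R x_local + t. The function `local_depth` is a hypothetical analytic stand-in for a trained NeDF, and `world_depth` is an illustrative name, not part of the paper.

```python
import numpy as np


def local_depth(origin, direction):
    """Hypothetical stand-in for a NeDF in local space:
    a sphere of radius 0.5 centred at (1, 0, 0). Returns inf on a miss."""
    oc = origin - np.array([1.0, 0.0, 0.0])
    b = oc @ direction
    disc = b * b - (oc @ oc - 0.25)
    if disc <= 0.0:
        return np.inf
    t = -b - np.sqrt(disc)
    return t if t > 0.0 else np.inf


def world_depth(origin, direction, R, t):
    """Query the local depth function with a world-space ray.

    R is a 3x3 rotation and t a translation placing the object in the world.
    A rigid transform preserves lengths, so the depth returned in local space
    is also the depth along the world-space ray.
    """
    o_local = R.T @ (origin - t)   # inverse rigid transform of the ray origin
    d_local = R.T @ direction      # directions are only rotated
    return local_depth(o_local, d_local)


# Place the object: rotate 90 degrees about z, then translate by (2, 0, 0).
R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([2.0, 0.0, 0.0])

down = np.array([0.0, 0.0, -1.0])
d_hit = world_depth(np.array([2.0, 1.0, 5.0]), down, R, t)   # above the moved sphere
d_miss = world_depth(np.array([1.0, 0.0, 5.0]), down, R, t)  # above its old position
```

Because only the ray is transformed, moving or rotating an object in this sketch changes `R` and `t` but never the depth function itself, which is the property that makes interactive placement cheap.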